Custom Resource Definitions (CRDs) in Kubernetes will soon abstract away every cloud provider and therefore become the single interface through which developers interact with the cloud.

Let's elaborate on this by first illustrating what the development workflow in the cloud looks like today, and then explaining how CRDs can greatly improve this process.

Developing a cloud-ready application often involves a lot of context and tool switching. Imagine creating a simple dashboard application that displays information from a database. As persistence layers are still best kept outside of Kubernetes today, the database is most often chosen from the service offerings of a cloud provider. It can then be provisioned via infrastructure as code (IaC) tools like Terraform, or by simply clicking your way through the cloud provider's dashboard. In the worst case, a ticket even has to be created so that the operations team can pick up the request and provision the database. Before the application can be deployed, the database credentials have to be pushed to the Kubernetes cluster as secrets, so that the application can access the database. Afterwards, the dashboard application can finally be deployed and connect to the database. To make the dashboard reachable, a DNS record pointing to the application's ingress inside Kubernetes has to be created. This again can require switching to a different tool to create the necessary records.

This small example already shows how many different tools and mechanisms are involved in developing and deploying a simple application. This can get frustrating very quickly, as a lot of time is lost context-switching between multiple interfaces just to get the job done.

So what would the ideal development workflow look like? Kubernetes has already changed the way we run applications in the cloud, and many developers work with deployment YAML files anyway, so it seems feasible to define our database, caching layer, DNS records etc. via Kubernetes YAML files as well. Imagine a world where all your infrastructure dependencies are defined exactly like your application deployments and are magically launched in the best cloud for the respective infrastructure solution.

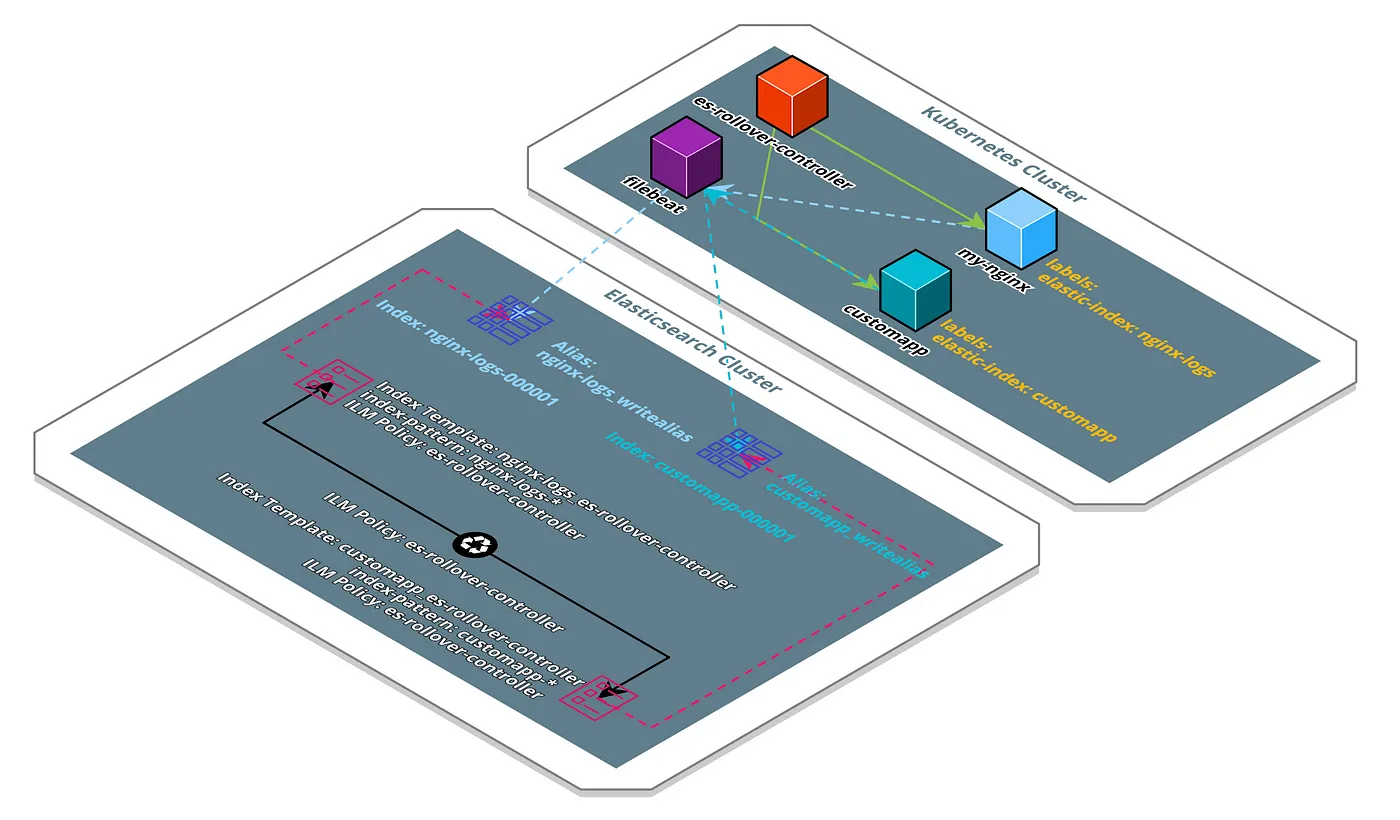

Kubernetes CRDs make exactly this possible. They let us extend the Kubernetes API with custom resources, which can then be used to define the desired state of an object (e.g. a database as a service from a cloud provider with X amount of storage, Y amount of CPU and Z amount of RAM). Additionally, a controller is deployed to the cluster, which handles the actual logic of the resource life cycle and ensures that the actual state converges to the desired state of the defined resource. Once a CRD is installed in the cluster, its custom resources can be created and managed just like any other Kubernetes resource, for example with kubectl. This allows a seamless integration into the existing Kubernetes workflow.
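The reconciliation idea behind a controller can be sketched without any Kubernetes dependencies. The following Go program is a simplified illustration, not how a production controller is wired up: a hypothetical `FakeCloud` map stands in for the cloud provider's API, and `Reconcile` compares the desired `DatabaseSpec` with the actual state and only acts when the two differ. A real controller would typically be built on client-go or a framework, react to watch events and requeue on errors, none of which is shown here.

```go
package main

import "fmt"

// DatabaseSpec is the desired state declared in a custom resource (hypothetical fields).
type DatabaseSpec struct {
	StorageGB int
	CPU       int
	MemoryGB  int
}

// FakeCloud stands in for a provider API; it maps instance names to their current configuration.
type FakeCloud struct {
	instances map[string]DatabaseSpec
	calls     int // number of mutating API calls, to show that a converged state causes no work
}

func NewFakeCloud() *FakeCloud {
	return &FakeCloud{instances: map[string]DatabaseSpec{}}
}

// Reconcile drives the actual state of one instance toward the desired spec.
// It only inspects the current state, not the history of events,
// so running it repeatedly is safe.
func Reconcile(cloud *FakeCloud, name string, desired DatabaseSpec) string {
	actual, exists := cloud.instances[name]
	switch {
	case !exists:
		cloud.instances[name] = desired
		cloud.calls++
		return "created"
	case actual != desired:
		cloud.instances[name] = desired
		cloud.calls++
		return "updated"
	default:
		return "in sync"
	}
}

func main() {
	cloud := NewFakeCloud()
	desired := DatabaseSpec{StorageGB: 20, CPU: 2, MemoryGB: 4}

	fmt.Println(Reconcile(cloud, "dashboard-db", desired)) // prints "created"
	fmt.Println(Reconcile(cloud, "dashboard-db", desired)) // prints "in sync"

	// Simulate drift, e.g. someone resizing the instance in the cloud console.
	cloud.instances["dashboard-db"] = DatabaseSpec{StorageGB: 20, CPU: 1, MemoryGB: 4}
	fmt.Println(Reconcile(cloud, "dashboard-db", desired)) // prints "updated"
}
```

Because `Reconcile` only compares the current state with the desired one, it can be run any number of times. This level-based design is what lets a controller repair drift, such as a manual change made outside of Kubernetes.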

So what is the current state of CRDs, and what kind of controllers are already available? Kubernetes 1.10 added a number of new features and improvements for CRDs, such as the scale and status sub-resources. This shows a clear push toward production-ready CRDs, so we can expect a lot more development in this direction. I also recently started writing my first, very basic controller, which allows the provisioning of S3 buckets on AWS. You can take a look at the work in progress over on GitLab to get a glimpse of what a controller can look like.
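To illustrate what the status sub-resource is for, the sketch below models a hypothetical `Bucket` resource loosely inspired by the S3 use case; it does not talk to AWS and is not the controller mentioned above. With the status sub-resource enabled, the API server increments `metadata.generation` for spec changes but not for status updates, and status changes are only persisted through the `/status` endpoint. A common convention is for the controller to record the generation it last acted on in `status.observedGeneration`. This sketch mimics that with plain Go structs; the `Generation` field and the `UpdateSpec`, `ReconcileStatus` and `UpToDate` helpers are hypothetical stand-ins.

```go
package main

import "fmt"

// BucketSpec is what the user asks for (hypothetical fields).
type BucketSpec struct {
	Region     string
	Versioning bool
}

// BucketStatus is written only by the controller.
type BucketStatus struct {
	Phase              string
	ObservedGeneration int64
}

// Bucket is a simplified custom resource. Generation models metadata.generation.
type Bucket struct {
	Name       string
	Generation int64
	Spec       BucketSpec
	Status     BucketStatus
}

// UpdateSpec models a user edit: an actual spec change bumps the generation.
func (b *Bucket) UpdateSpec(spec BucketSpec) {
	if spec != b.Spec {
		b.Spec = spec
		b.Generation++
	}
}

// ReconcileStatus models the controller: after acting on the spec it records
// which generation it has handled, without touching the spec.
func ReconcileStatus(b *Bucket) {
	// A real controller would call the cloud provider's API here.
	b.Status = BucketStatus{Phase: "Ready", ObservedGeneration: b.Generation}
}

// UpToDate reports whether the status reflects the latest spec.
func UpToDate(b *Bucket) bool {
	return b.Status.ObservedGeneration == b.Generation
}

func main() {
	b := &Bucket{Name: "assets", Generation: 1, Spec: BucketSpec{Region: "eu-central-1"}}
	fmt.Println(UpToDate(b)) // false: the controller has not run yet
	ReconcileStatus(b)
	fmt.Println(UpToDate(b)) // true
	b.UpdateSpec(BucketSpec{Region: "eu-central-1", Versioning: true})
	fmt.Println(UpToDate(b)) // false until the next reconcile
}
```

Keeping spec and status apart this way lets users and tools tell whether the controller has caught up with the latest change, without the two writers overwriting each other's fields.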

So which ready-to-use controllers are already available? Currently, most of them are in beta or still under heavy development, and there is a lack of official CRDs provided by the cloud providers. However, there is already a great collection of controllers from the open source community that hints at the bright future of CRDs.

CoreOS recently [introduced the Operator Framework](https://www.redhat.com/en/blog/introducing-operator-framework-building-apps-kubernetes), which provides basic building blocks for writing controllers that manage resources within Kubernetes. They also maintain a list of awesome operators, which is a great resource for discovering well-designed controllers. Keep in mind that most of them launch and manage resources inside Kubernetes, whereas sometimes it is more beneficial to manage a database-as-a-service offering from the cloud provider of your choice. The RDS controller is a good example of this use case, as it can launch and operate RDS instances in AWS via the Kubernetes API.

Imagine a future where CRD controllers are maintained by the cloud providers themselves, providing a robust mechanism for launching resources via Kubernetes.

This could truly change the way we interact with the cloud. Today this is still a look into the future, but until we get there, let's write some awesome controllers ourselves and keep a close eye on the awesome-operators repository.